Industry: SaaS / Digital Marketing / SEO Tools

Market: Global

Status: 6 months; undergoing final pre-production release testing

About the client:

Our Europe-based client recognized a major shift in how artificial intelligence is reshaping search behavior. AI assistants such as ChatGPT, Perplexity, and Gemini increasingly replace traditional keyword search with direct answers. In response, the client set out to understand this emerging landscape through comprehensive AI SEO research covering adoption trends, use cases, and strategic implications for brands. That research led the client to dedicate the Inviggo engineering team fully to building an AI-powered search visibility engine that shows exactly how a brand appears across modern AI assistants. The platform reveals how these systems perceive and represent a brand, and it provides clear, actionable recommendations for improving the brand's visibility.

Project Overview:

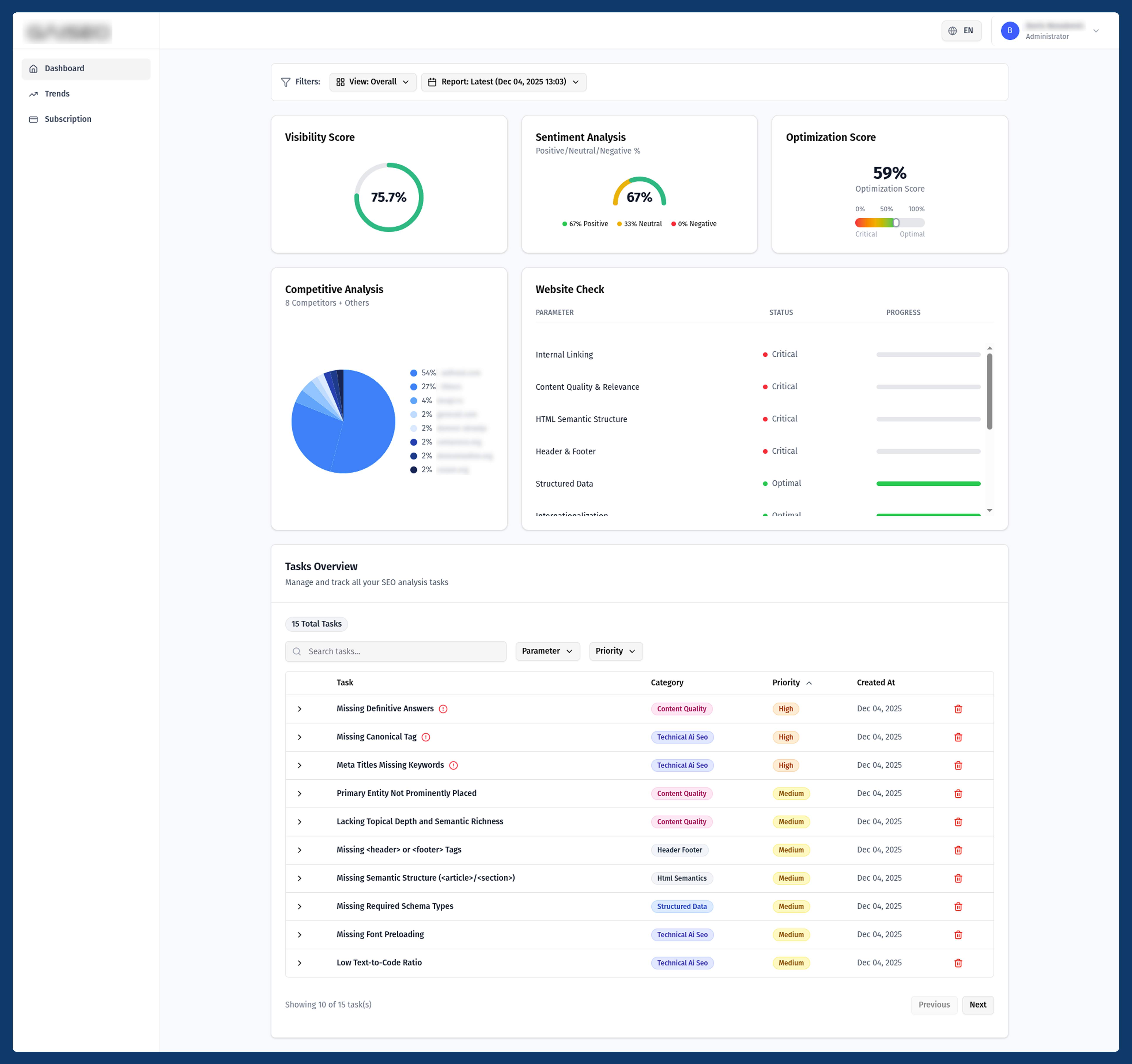

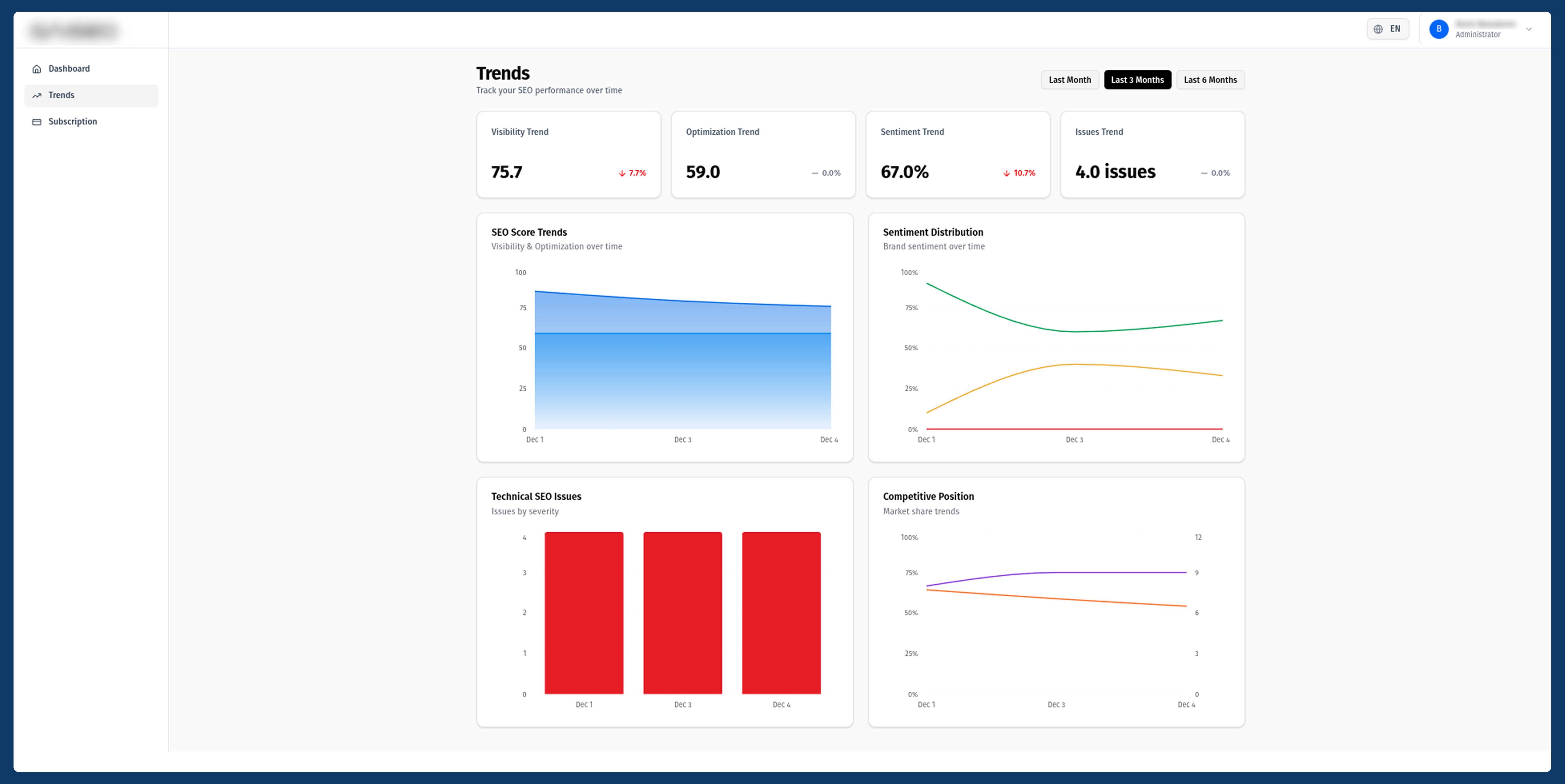

The project is an enterprise-grade, AI-powered SEO analysis platform that demonstrates advanced full-stack engineering across modern web technologies, cloud infrastructure, and AI integration. It combines automated website crawling, intelligent multi-language analysis, and sophisticated AI orchestration to deliver actionable SEO insights at scale. In practice, the platform:

- analyzes websites in any language (English, German, Japanese, Arabic, etc.),
- generates concrete optimization tasks, and
- tracks brand visibility across AI-powered search assistants.

The main challenges were handling complex background job processing, integrating with multiple AI providers, and delivering comprehensive reports with culturally adapted recommendations for global markets.

What sets this project apart is its use of Bull Flow Producer for partially retryable AI workflows. The platform orchestrates three LLM providers: self-hosted Azure AI Foundry with Bing Grounding, Google Gemini, and Perplexity. All three run in parallel, and each can fail and retry independently, so reports are generated substantially faster than with a traditional sequential approach. Azure AI Foundry Hub with Bing Grounding supplies web-aware AI responses. This enables a distinctive competitive intelligence capability, analyzing brand visibility in conversational search, which is a critical differentiator in the evolving SEO landscape.

Core Products

1. AI-powered website crawler and analyzer

2. Multi-language baseline SEO report generation

3. Intelligent task generation with 11+ specialized analyzers

4. Brand visibility tracking in AI-powered search

5. Culturally-adapted query generation system

6. Real-time competitive intelligence dashboard

Key Features

1. Automatic language detection and locale-aware analysis

2. Self-hosted Azure AI Foundry with Bing Grounding integration

3. Multi-LLM orchestration (Azure OpenAI, Google Gemini, Perplexity)

4. Partial retry architecture for fault-tolerant AI workflows

5. Comprehensive SEO task analyzers covering 11+ aspects

6. Blue-green deployment with automated health checks

7. Distributed tracing and observability across all services

8. Stripe-powered subscription management

9. OAuth authentication with Google integration

Additional Features

1. Multi-environment deployment (development and production)

2. Credential-less CI/CD with Azure OIDC

3. Infrastructure as Code with Terraform

4. Automated SSL certificate provisioning

5. Custom domain management

6. Scheduled background job processing

7. Real-time alerting with multi-channel notifications

Frontend: Next.js 15 (App Router), React 19, TailwindCSS, TanStack Query, NextAuth 5, next-intl

Backend: Hono (Node.js), TypeScript, Drizzle ORM, Bull + Bull Flow Producer, Redis, Puppeteer, Chanfana (OpenAPI)

AI/ML: Azure AI Foundry, Azure AI Agent SDK, Azure OpenAI (GPT-4o), Google Gemini, Perplexity, Bing Grounding API

Infrastructure: Docker, Azure Container Apps, Azure Container Registry, PostgreSQL Flexible Server, Azure Storage, Terraform, GitHub Actions

Testing: Vitest, Playwright, React Testing Library, Supertest

Databases: PostgreSQL with Drizzle ORM (multi-database architecture)

Queue System: Bull + Bull Flow Producer + Redis for background job processing with partial retry workflows

Monorepo: Turborepo with pnpm workspaces

CI/CD: GitHub Actions with Azure OIDC authentication

Payments: Stripe (checkout, webhooks, subscriptions)

Traditional queue systems treat jobs as atomic units - if any step fails, the entire job must restart from scratch. For AI workflows involving multiple LLM providers (Azure OpenAI, Google Gemini, Perplexity), this approach wastes costly API calls and time. If one provider hits rate limits while others succeed, restarting duplicates successful calls and delays report generation.

We needed to build a fault-tolerant workflow where each LLM query could fail and retry independently without affecting completed work, while maintaining proper dependency management and result aggregation.
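The sketch below makes the cost argument concrete by counting LLM API calls under both strategies. It is a simplified model, not the platform's code. It assumes two things:

- every attempt of an atomic job calls all three providers;
- a provider fails a fixed number of times (for example on HTTP 429 rate limits) before it succeeds.

The `ProviderProfile` type, the failure counts, and the function names are hypothetical.

```typescript
// Hypothetical failure model: a provider fails its first `transientFailures`
// calls (e.g. rate limits) and succeeds on the next one.
interface ProviderProfile {
  name: string;
  transientFailures: number;
}

interface Outcome {
  apiCalls: number;    // total LLM API calls issued
  succeeded: string[]; // providers whose answer was obtained
}

// Atomic job: every attempt calls all providers; a single failure discards
// the attempt and the whole job restarts from scratch.
function atomicRetry(providers: ProviderProfile[], maxAttempts: number): Outcome {
  const needed = Math.max(...providers.map((p) => p.transientFailures)) + 1;
  const attempts = Math.min(needed, maxAttempts);
  return {
    apiCalls: providers.length * attempts,
    succeeded: needed <= maxAttempts ? providers.map((p) => p.name) : [],
  };
}

// Partial retry: each provider is its own unit of work and retries in
// isolation, so a successful call is never repeated.
function partialRetry(providers: ProviderProfile[], maxAttempts: number): Outcome {
  let apiCalls = 0;
  const succeeded: string[] = [];
  for (const p of providers) {
    const needed = p.transientFailures + 1;
    apiCalls += Math.min(needed, maxAttempts);
    if (needed <= maxAttempts) succeeded.push(p.name);
  }
  return { apiCalls, succeeded };
}

const providers: ProviderProfile[] = [
  { name: "azure-foundry", transientFailures: 0 },
  { name: "gemini", transientFailures: 0 },
  { name: "perplexity", transientFailures: 2 }, // rate-limited twice
];

console.log(atomicRetry(providers, 5).apiCalls);  // 9
console.log(partialRetry(providers, 5).apiCalls); // 5
```

With one provider rate-limited twice, the atomic job issues 9 calls to obtain 3 answers, while per-provider retries issue 5. If the retry budget runs out, partial retry still keeps the answers from the providers that did succeed.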

Rather than relying solely on third-party AI APIs, the client required full control over its AI infrastructure to manage costs, ensure reliability, and enable advanced capabilities. This meant:

- deploying an Azure AI Foundry Hub with workspace connections,
- integrating Bing Grounding for real-time web search during inference, and
- using the Agent SDK for agentic workflows.

All of this had to work on preview APIs and with complex service-to-service authentication.

The platform needed to analyze websites in any language (English, German, Japanese, Arabic, etc.) and return culturally relevant recommendations, not just a translation layer. This required:

- automatic language detection,
- locale-specific SEO best practices,
- correct handling of non-Latin character sets,
- right-to-left text support, and
- an understanding of cultural differences in search behavior (e.g., German users prioritizing technical certifications vs. US users focusing on value).
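Language handling starts with knowing which writing system a page uses. Here is a minimal sketch of one possible heuristic. It assumes nothing about the platform's actual detector. It counts characters per Unicode script using regex property escapes, then derives text direction from the dominant script.

The script table and function names are illustrative. A production detector would also weigh the declared `lang` attribute and distinguish languages that share a script. Here, German and English both count as Latin, and Japanese and Chinese both count as CJK.

```typescript
// Unicode property escapes, built with the RegExp constructor and the "u" flag.
const SCRIPT_PATTERNS: Array<[string, RegExp]> = [
  ["Latin", new RegExp("\\p{Script=Latin}", "u")],
  ["Cyrillic", new RegExp("\\p{Script=Cyrillic}", "u")],
  ["Arabic", new RegExp("\\p{Script=Arabic}", "u")],
  ["Hebrew", new RegExp("\\p{Script=Hebrew}", "u")],
  // Kana plus Han ideographs; this heuristic cannot tell Japanese from Chinese.
  ["CJK", new RegExp("[\\p{Script=Hiragana}\\p{Script=Katakana}\\p{Script=Han}]", "u")],
];

const RTL_SCRIPTS = new Set(["Arabic", "Hebrew"]);

// Count characters per script and return the most frequent one (null if none match).
function dominantScript(text: string): string | null {
  const counts = new Map<string, number>();
  for (const ch of text) { // iterates by code point, not by UTF-16 unit
    const hit = SCRIPT_PATTERNS.find(([, re]) => re.test(ch));
    if (hit) counts.set(hit[0], (counts.get(hit[0]) ?? 0) + 1);
  }
  let best: string | null = null;
  let bestCount = 0;
  for (const [script, n] of counts) {
    if (n > bestCount) {
      best = script;
      bestCount = n;
    }
  }
  return best;
}

function textDirection(text: string): "ltr" | "rtl" {
  const script = dominantScript(text);
  return script !== null && RTL_SCRIPTS.has(script) ? "rtl" : "ltr";
}

console.log(dominantScript("Grüße aus Berlin"), textDirection("Grüße aus Berlin")); // Latin ltr
console.log(dominantScript("مرحبا بالعالم"), textDirection("مرحبا بالعالم"));       // Arabic rtl
```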

To enable independent scaling and optimization, we implemented separate PostgreSQL databases - one for authentication and subscriptions (web), another for SEO analytics data (API). Each required independent Drizzle ORM schemas, migration pipelines, connection pools, and query optimization strategies while maintaining data consistency across the system.
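The two databases cannot share foreign keys, so consistency between them has to be enforced in application code. One common approach is a periodic reconciliation job that compares owner IDs in the analytics database against users in the auth database. This is sketched here under assumptions, because the case study does not describe the actual mechanism. `WebUser`, `AnalyticsProject`, and `reconcile` are hypothetical names; in practice each list would come from its own Drizzle client.

```typescript
// Hypothetical row shapes; the real Drizzle schemas are not part of this case study.
interface WebUser {
  id: string;
  subscriptionActive: boolean;
}

interface AnalyticsProject {
  id: string;
  ownerId: string; // refers to WebUser.id, but no cross-database foreign key enforces it
}

interface ReconcileReport {
  orphaned: string[];      // project IDs whose owner no longer exists in the web DB
  inactiveOwner: string[]; // project IDs whose owner has no active subscription
}

function reconcile(users: WebUser[], projects: AnalyticsProject[]): ReconcileReport {
  const usersById = new Map(users.map((u) => [u.id, u] as const));
  const report: ReconcileReport = { orphaned: [], inactiveOwner: [] };
  for (const project of projects) {
    const owner = usersById.get(project.ownerId);
    if (!owner) report.orphaned.push(project.id);
    else if (!owner.subscriptionActive) report.inactiveOwner.push(project.id);
  }
  return report;
}

const report = reconcile(
  [{ id: "u1", subscriptionActive: true }, { id: "u2", subscriptionActive: false }],
  [{ id: "p1", ownerId: "u1" }, { id: "p2", ownerId: "u2" }, { id: "p3", ownerId: "u3" }],
);
console.log(report); // { orphaned: [ 'p3' ], inactiveOwner: [ 'p2' ] }
```

What to do with the findings (archive, delete, or pause analysis) is a product decision that the sketch leaves open.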

The client needed enterprise-grade reliability without overspending. That meant:

- deploying to two Azure environments (development and production) with appropriately sized resources,
- implementing blue-green deployments with automated health checks and rollback, and
- managing infrastructure as code with Terraform across multiple subscriptions.

Intelligent resource sharing delivered 40% cost savings while maintaining performance.
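A blue-green release shifts traffic only after the new revision has proven healthy. Below is a simplified, synchronous model of that gate. It assumes a health probe that must pass several times in a row. In Azure Container Apps, the two colors would map to two active revisions with traffic weights. The function names, the thresholds, and the all-or-nothing 0/100 switch are assumptions for illustration.

```typescript
interface TrafficSplit {
  blue: number;  // % of traffic on the currently live revision
  green: number; // % of traffic on the newly deployed revision
}

interface ReleaseResult {
  promoted: boolean;
  split: TrafficSplit;
}

// Probe the green revision until it passes `requiredPasses` checks in a row.
// Only then shift all traffic; otherwise keep (roll back to) blue.
function blueGreenRelease(probe: () => boolean, requiredPasses: number, maxProbes: number): ReleaseResult {
  let consecutivePasses = 0;
  for (let i = 0; i < maxProbes; i++) {
    consecutivePasses = probe() ? consecutivePasses + 1 : 0;
    if (consecutivePasses >= requiredPasses) {
      return { promoted: true, split: { blue: 0, green: 100 } };
    }
  }
  return { promoted: false, split: { blue: 100, green: 0 } };
}

// Helper: replay a fixed sequence of probe outcomes (missing entries count as failures).
function probeSequence(outcomes: boolean[]): () => boolean {
  let i = 0;
  return () => outcomes[i++] ?? false;
}

console.log(blueGreenRelease(probeSequence([false, true, true, true]), 3, 5).promoted);      // true
console.log(blueGreenRelease(probeSequence([true, false, true, false, true]), 2, 5).promoted); // false
```

Because green receives no traffic until it is promoted, "rollback" in this model simply means leaving the split on blue.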

Managing Terraform infrastructure across multiple Azure subscriptions required robust state management. It had to prevent concurrent modifications, enable team collaboration, and provide disaster recovery. This meant implementing an Azure Storage backend with lease-based state locking, blob versioning for rollback, workspace-based multi-environment configuration, and proper access controls.
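With lease-based locking, a writer must hold an exclusive lease on the state blob before changing it. A second, concurrent `terraform apply` therefore fails instead of corrupting state. The class below is an in-memory model of those semantics only. It does not call Azure Storage, and `StateLease` and `runApply` are hypothetical names. In the real backend, a stuck lock is cleared with `terraform force-unlock`.

```typescript
// In-memory model of an exclusive lease on the state blob (no Azure calls).
class StateLease {
  private leaseId: string | null = null;
  private nextId = 1;

  // Returns a lease ID on success, or null if another writer holds the lease.
  acquire(): string | null {
    if (this.leaseId !== null) return null;
    this.leaseId = `lease-${this.nextId++}`;
    return this.leaseId;
  }

  // Only the current holder can release the lease.
  release(leaseId: string): boolean {
    if (this.leaseId !== leaseId) return false;
    this.leaseId = null;
    return true;
  }
}

// Wraps a state-changing operation: lock, apply, always unlock.
function runApply(lease: StateLease, apply: () => void): "applied" | "locked" {
  const id = lease.acquire();
  if (id === null) return "locked";
  try {
    apply();
    return "applied";
  } finally {
    lease.release(id);
  }
}

const state = new StateLease();
const held = state.acquire();                // first writer gets the lease
console.log(held !== null, state.acquire()); // true null (second writer is blocked)
if (held) state.release(held);
console.log(runApply(state, () => {}));      // applied
```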

Next.js 15 & React 19: Chosen for React Server Components, the App Router's improved routing and data fetching, and internationalization through next-intl. Together these enable the fast, SEO-friendly rendering that a marketing-focused platform depends on.

Hono (Node.js): Selected as a lightweight, high-performance API framework with a TypeScript-first design. Its OpenAPI integration through Chanfana provides automatic API documentation and validation.

Bull Flow Producer: Implemented for advanced queue orchestration with parent-child job dependency graphs, enabling partial retry capabilities and isolated failure domains - critical for cost-effective multi-LLM workflows.

Azure AI Foundry: Deployed self-hosted AI infrastructure providing full control over deployments, costs (30-50% savings vs. third-party APIs), and capabilities - with Agent SDK enabling sophisticated agentic patterns and Bing Grounding for web-aware responses.

Drizzle ORM: Chosen for type-safe database operations with excellent TypeScript integration, schema-based migrations, and minimal overhead—supporting our dual-database architecture with independent schemas.

Terraform: Utilized for Infrastructure as Code enabling reproducible deployments, version-controlled infrastructure changes, and multi-environment management—with Azure Storage backend providing production-grade state management.

PostgreSQL Flexible Server: Selected for enterprise-grade reliability, flexible SKU options (enabling cost optimization between environments), automatic backups, and high availability configurations.

Azure Container Apps: Deployed for serverless container orchestration with built-in scaling, blue-green deployment support, custom domain management, and seamless CI/CD integration.

Vitest & Playwright: Implemented for comprehensive testing across modern frameworks—Vitest for fast unit/integration tests, Playwright for reliable E2E testing of complex user flows including authentication and payment processing.

The platform orchestrates three different AI providers (Azure AI Foundry with Bing Grounding, Google Gemini, Perplexity) in parallel using Bull Flow Producer's parent-child job pattern. Each LLM query runs as an independent child job with isolated retry policies—if one provider fails, it retries independently while other providers' results are preserved.
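The parent-child pattern can be modeled in a few lines. This sketch is an in-memory simulation, not Bull code:

- each child carries its own retry budget and exponential backoff, with the base delay doubling after each failed attempt;
- the parent step runs once every child has settled and aggregates whatever succeeded;
- for simplicity the children run one after another here, whereas in the queue they run in parallel.

`ChildJob`, `runChild`, and `runFlow` are hypothetical names. How a real parent job treats a child that exhausts its retries depends on job options that the case study does not specify.

```typescript
// Hypothetical in-memory model of a parent/child flow (not Bull/BullMQ code).
interface ChildJob<T> {
  name: string;
  attempts: number;            // retry budget for this child only
  backoffMs: number;           // base delay; doubles after each failed attempt
  run: (attempt: number) => T; // throws on failure
}

interface ChildResult<T> {
  name: string;
  ok: boolean;
  value?: T;
  error?: string;
  retryDelaysMs: number[];     // waits a queue would schedule before each retry
}

// Run one child with its own retries; other children are unaffected by its failures.
function runChild<T>(job: ChildJob<T>): ChildResult<T> {
  const retryDelaysMs: number[] = [];
  for (let attempt = 1; attempt <= job.attempts; attempt++) {
    try {
      return { name: job.name, ok: true, value: job.run(attempt), retryDelaysMs };
    } catch (err) {
      if (attempt === job.attempts) {
        return { name: job.name, ok: false, error: String(err), retryDelaysMs };
      }
      retryDelaysMs.push(job.backoffMs * 2 ** (attempt - 1));
    }
  }
  throw new Error(`${job.name}: attempts must be at least 1`);
}

// Parent step: runs after all children have settled and keeps every successful result.
function runFlow<T>(children: ChildJob<T>[]) {
  const results = children.map((child) => runChild(child)); // sequential here; parallel in a real queue
  return {
    values: Object.fromEntries(results.filter((r) => r.ok).map((r) => [r.name, r.value])),
    failed: results.filter((r) => !r.ok).map((r) => r.name),
    results,
  };
}

const report = runFlow<string>([
  { name: "azure-foundry", attempts: 3, backoffMs: 1_000, run: () => "answer A" },
  {
    name: "gemini",
    attempts: 3,
    backoffMs: 1_000,
    run: (attempt) => {
      if (attempt < 3) throw new Error("429 Too Many Requests"); // simulated rate limit
      return "answer G";
    },
  },
  {
    name: "perplexity",
    attempts: 2,
    backoffMs: 1_000,
    run: () => {
      throw new Error("timeout"); // simulated persistent failure
    },
  },
]);

console.log(report.values);                   // answers from azure-foundry and gemini
console.log(report.failed);                   // [ 'perplexity' ]
console.log(report.results[1].retryDelaysMs); // [ 1000, 2000 ]
```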

Impact: Achieved 60% faster report generation compared to sequential approaches, reduced LLM costs by 40% through elimination of duplicate API calls during retries, and improved reliability by preventing provider-specific failures from cascading to overall report failure.
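The speed-up from parallel children follows from simple arithmetic. A sequential pipeline's wall-clock time is the sum of the provider latencies, whereas a parallel flow waits only for the slowest child. The latencies below are hypothetical placeholders, not measurements from the platform.

```typescript
// Wall-clock time for sequential vs. parallel provider calls.
function wallClock(latenciesMs: number[]) {
  const sequentialMs = latenciesMs.reduce((sum, ms) => sum + ms, 0); // one provider after another
  const parallelMs = Math.max(...latenciesMs);                       // parent waits for the slowest child
  return { sequentialMs, parallelMs, reduction: 1 - parallelMs / sequentialMs };
}

// Hypothetical latencies for the three providers (illustrative, not measured).
const { sequentialMs, parallelMs, reduction } = wallClock([9_000, 6_000, 5_000]);
console.log(sequentialMs, parallelMs, reduction.toFixed(2)); // 20000 9000 0.55
```

With three equally slow providers, the reduction tops out at 1 - 1/3, about 67%. A retry on one provider lengthens only that child's branch of the flow.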

Deployed Azure AI Foundry Hub as the central orchestration layer, connecting self-hosted Azure OpenAI (GPT-4o) with Bing Grounding API through workspace connections. The Agent SDK enables GPT-4o to access real-time Bing search results during inference, providing web-aware responses grounded in current competitive intelligence.
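Once a grounded answer comes back, measuring brand visibility is largely text analysis. Here is a minimal sketch. It assumes a simplified answer shape containing the response text and its cited URLs; the real Agent SDK response format is not reproduced here. The sketch checks:

- whether the brand is mentioned, and where it first appears;
- whether the brand's own domain is cited;
- which competitors appear.

All type and function names, and the example brands, are hypothetical.

```typescript
// Hypothetical, simplified shape of a grounded assistant answer.
interface GroundedAnswer {
  provider: string;
  text: string;
  citations: string[]; // source URLs returned with the answer
}

interface BrandVisibility {
  provider: string;
  mentioned: boolean;
  firstMentionIndex: number | null; // character offset of the first mention
  citedOwnDomain: boolean;
  competitorsMentioned: string[];
}

function hostOf(url: string): string | null {
  try {
    return new URL(url).hostname;
  } catch {
    return null; // skip malformed citation URLs
  }
}

function brandVisibility(answer: GroundedAnswer, brand: string, domain: string, competitors: string[]): BrandVisibility {
  const lower = answer.text.toLowerCase();
  const index = lower.indexOf(brand.toLowerCase());
  const citedOwnDomain = answer.citations.some((url) => {
    const host = hostOf(url);
    return host !== null && (host === domain || host.endsWith(`.${domain}`));
  });
  return {
    provider: answer.provider,
    mentioned: index >= 0,
    firstMentionIndex: index >= 0 ? index : null,
    citedOwnDomain,
    competitorsMentioned: competitors.filter((c) => lower.includes(c.toLowerCase())),
  };
}

const visibility = brandVisibility(
  {
    provider: "azure-foundry",
    text: "For project tracking, Acme and Globex are popular; Acme offers a free tier.",
    citations: ["https://www.acme.example/pricing", "https://reviews.example.org/pm-tools"],
  },
  "Acme",
  "acme.example",
  ["Globex", "Initech"],
);
console.log(visibility);
```

Plain substring matching is deliberately naive. It would count "Acme" inside "Acmeville", so a real implementation would need word-boundary matching and brand aliases.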

Impact: Gained full control over AI deployments avoiding third-party markups (30-50% cost savings), enabled unique competitive intelligence capabilities analyzing brand visibility in conversational search, and positioned the platform ahead of traditional SEO tools lacking AI-powered market analysis.

Implemented automatic language detection and locale-aware analysis enabling SEO reports in any input language. The system detects website language, applies culturally-relevant SEO best practices specific to that locale, and generates recommendations in the detected language—supporting complex scenarios including non-Latin character sets, right-to-left text, and locale-specific HTML semantics.
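Below is a sketch of how a locale might be resolved. It assumes the page's declared `<html lang>` takes precedence over a detected language, with an English default otherwise; the precedence order and the names are assumptions. It relies only on the standard `Intl.getCanonicalLocales` and `Intl.Locale`, which normalize tags such as `de-de` to `de-DE` and reject malformed ones. The RTL language list is a small illustrative subset.

```typescript
const RTL_LANGUAGES = new Set(["ar", "fa", "he", "ur"]); // illustrative subset

// Naive extraction of <html lang="...">; a real crawler would read it from the parsed DOM.
function declaredLang(html: string): string | null {
  const match = /<html\b[^>]*\blang\s*=\s*["']?([A-Za-z0-9-]+)/i.exec(html);
  return match ? match[1] : null;
}

interface ResolvedLocale {
  locale: string;   // canonical BCP 47 tag, e.g. "de-DE"
  language: string; // primary language subtag, e.g. "de"
  dir: "ltr" | "rtl";
}

// Precedence (assumed): declared lang, then detected language, then fallback.
function resolveLocale(html: string, detected: string | null, fallback = "en"): ResolvedLocale {
  for (const candidate of [declaredLang(html), detected, fallback]) {
    if (!candidate) continue;
    try {
      const [locale] = Intl.getCanonicalLocales(candidate); // throws RangeError on malformed tags
      const language = new Intl.Locale(locale).language;
      return { locale, language, dir: RTL_LANGUAGES.has(language) ? "rtl" : "ltr" };
    } catch {
      // Malformed tag: fall through to the next candidate.
    }
  }
  throw new Error(`fallback locale "${fallback}" is not a valid BCP 47 tag`);
}

console.log(resolveLocale('<html lang="de-de"><body></body></html>', null)); // locale de-DE, language de, dir ltr
console.log(resolveLocale("<html><body></body></html>", "ar").dir);            // rtl
```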

Impact: Enabled true global reach with high-quality analysis for websites in English, German, Japanese, Arabic, and beyond—demonstrating capability to serve international markets without language barriers.

Developed 11+ modular analyzers examining specific SEO aspects: alt-text, schema markup, content quality, freshness, headings, semantic HTML, internal linking, internationalization, E-A-T signals, FAQ opportunities, and structured data. Each analyzer returns localized recommendations with priority scoring and actionable guidance including code examples.
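The modular design can be expressed as a shared analyzer contract. Each analyzer inspects the page and returns prioritized recommendations, and a runner merges and sorts them. The interface, the priority scale, and the two toy analyzers below are hypothetical. For brevity they use naive regular expressions where a real analyzer would parse the DOM, and their messages are in English rather than localized.

```typescript
interface Recommendation {
  analyzer: string;
  priority: 1 | 2 | 3; // 1 = highest impact (assumed scale)
  message: string;
}

interface Analyzer {
  name: string;
  analyze(html: string): Recommendation[];
}

// Flags <img> tags without an alt attribute.
const altText: Analyzer = {
  name: "alt-text",
  analyze(html) {
    const images = html.match(/<img\b[^>]*>/gi) ?? [];
    const missing = images.filter((tag) => !/\balt\s*=/i.test(tag)).length;
    return missing === 0
      ? []
      : [{ analyzer: "alt-text", priority: 2, message: `${missing} image(s) lack alt text` }];
  },
};

// Expects exactly one <h1> per page.
const headings: Analyzer = {
  name: "headings",
  analyze(html) {
    const h1Count = (html.match(/<h1\b/gi) ?? []).length;
    if (h1Count === 1) return [];
    const message = h1Count === 0 ? "Page has no <h1>" : `Page has ${h1Count} <h1> elements`;
    return [{ analyzer: "headings", priority: 1, message }];
  },
};

// Run every analyzer and order the combined list by priority.
function runAnalyzers(html: string, analyzers: Analyzer[]): Recommendation[] {
  return analyzers.flatMap((a) => a.analyze(html)).sort((a, b) => a.priority - b.priority);
}

const samplePage = '<h1>Pricing</h1><h1>Plans</h1><img src="a.png"><img src="b.png" alt="Chart">';
for (const rec of runAnalyzers(samplePage, [altText, headings])) {
  console.log(`[P${rec.priority}] ${rec.analyzer}: ${rec.message}`);
}
// [P1] headings: Page has 2 <h1> elements
// [P2] alt-text: 1 image(s) lack alt text
```

New checks (schema markup, internal linking, and so on) would plug in as further `Analyzer` objects without changing the runner.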

Impact: Provides comprehensive SEO analysis covering all critical optimization areas with actionable, prioritized recommendations—enabling users to focus on high-impact improvements.

Architected comprehensive Azure infrastructure using Terraform spanning multiple subscriptions, managing Container Apps, PostgreSQL Flexible Servers, Redis, VNets with NSGs, AI Foundry Hub, Cognitive Services, and Bing Grounding. Implemented enterprise-grade remote state management with Azure Storage backend, lease-based state locking, blob versioning, and workspace-based configuration.

Impact: Achieved reproducible deployments across environments, enabled safe team collaboration preventing concurrent modification conflicts, provided disaster recovery capabilities through state versioning, and demonstrated 40% infrastructure cost savings through intelligent resource sharing.

1. Production Deployment Excellence: Delivered a fully operational platform across two Azure environments with enterprise-grade reliability. Blue-green deployment strategy with automated health checks ensures zero-downtime releases, while comprehensive rollback capabilities provide confidence in rapid iteration. Custom domain management, automated SSL certificate provisioning, and proper DNS configuration demonstrate end-to-end DevOps mastery.

2. Innovation in AI Workflow Orchestration: Pioneered partial retry architecture using Bull Flow Producer, achieving 60% faster report generation compared to traditional approaches. This innovative solution to multi-LLM orchestration eliminates wasteful API call duplication, reduces operational costs, and demonstrates advanced understanding of distributed systems and fault-tolerant design patterns, a technique not commonly seen in production systems.

3. Cutting-Edge AI Infrastructure: Successfully integrated self-hosted Azure AI Foundry with Bing Grounding, a sophisticated setup that provides web-aware AI responses with real-time search result grounding. This enables unique competitive intelligence capabilities for analyzing brand visibility in conversational search, positioning the platform ahead of traditional SEO tools that lack AI-powered market analysis. Full control over AI infrastructure provides predictable costs and customization capabilities unavailable with third-party AI services.

4. True Global Reach Through Intelligent Localization: Engineered multi-language support that enables SEO analysis for websites in any language (English, German, Japanese, Arabic, and beyond), with automatic language detection and culturally adapted recommendations.

5. Enterprise-Grade Architecture & Operations: Architected a sophisticated dual-database system with Drizzle ORM, enabling independent scaling strategies and clear data isolation. Production reliability rests on comprehensive observability: distributed tracing across all services, custom business metrics (LLM costs, queue latency), and multi-channel alerting. This level of operational maturity is typically seen only in established SaaS platforms.
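Two of the custom business metrics mentioned above reduce to small calculations. The sketch makes three assumptions:

- token usage is reported per LLM call;
- jobs record creation and start times in epoch milliseconds (Bull and BullMQ jobs expose `timestamp` and `processedOn` for this);
- prices come from a lookup table, which here holds placeholder numbers rather than real provider rates.

The function names are hypothetical.

```typescript
interface TokenUsage {
  model: string;
  inputTokens: number;
  outputTokens: number;
}

// Placeholder USD prices per 1M tokens; NOT real provider rates.
const PRICE_PER_MILLION: Record<string, { input: number; output: number }> = {
  "example-model": { input: 2, output: 8 },
};

function llmCostUsd(usage: TokenUsage): number {
  const price = PRICE_PER_MILLION[usage.model];
  if (!price) throw new Error(`no price configured for model ${usage.model}`);
  return (usage.inputTokens * price.input + usage.outputTokens * price.output) / 1_000_000;
}

// Minimal job timing fields (epoch ms), using the names Bull/BullMQ jobs expose.
interface JobTimes {
  timestamp: number;    // when the job was added to the queue
  processedOn?: number; // when a worker started it
}

// Queue latency = time spent waiting before a worker picked the job up.
function queueLatencyMs(job: JobTimes): number | null {
  return job.processedOn === undefined ? null : job.processedOn - job.timestamp;
}

console.log(llmCostUsd({ model: "example-model", inputTokens: 500_000, outputTokens: 100_000 })); // 1.8
console.log(queueLatencyMs({ timestamp: 1_000, processedOn: 1_250 }));                          // 250
```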

6. Security-First DevOps: Implemented credential-less CI/CD using Azure OIDC, eliminating stored secrets while maintaining full automation. Path-based pipeline triggers, comprehensive test suites (unit, integration, E2E), and automated deployments demonstrate modern DevOps excellence and security-conscious engineering practices.
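Path-based triggers run only the pipelines whose code actually changed. GitHub Actions supports this natively through the `paths` filter on `push` and `pull_request` events. The sketch below only models the decision. It assumes a hypothetical layout in which shared `packages/` affect both apps. The directory prefixes and pipeline names are illustrative, not the project's actual workflow configuration.

```typescript
// Hypothetical monorepo layout: pipeline name -> path prefixes that trigger it.
const PIPELINES: Record<string, string[]> = {
  web: ["apps/web/", "packages/"],
  api: ["apps/api/", "packages/"],
  infra: ["infra/"],
};

// A pipeline runs if any changed file falls under one of its prefixes.
function pipelinesToRun(changedFiles: string[]): string[] {
  return Object.entries(PIPELINES)
    .filter(([, prefixes]) => changedFiles.some((file) => prefixes.some((p) => file.startsWith(p))))
    .map(([name]) => name);
}

console.log(pipelinesToRun(["apps/api/src/routes/report.ts"])); // [ 'api' ]
console.log(pipelinesToRun(["packages/db/schema.ts"]));         // [ 'web', 'api' ]
console.log(pipelinesToRun(["README.md"]));                     // []
```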

7. Infrastructure as Code: Built reproducible, version-controlled infrastructure spanning multiple Azure subscriptions using Terraform. Multi-environment strategy with cost-optimized development resources and performance-optimized production resources shows an understanding of both technical excellence and business economics.

8. Comprehensive Testing Across the Modern Stack: Established robust test coverage across Next.js 15 (Server Components, App Router), the Hono API (OpenAPI validation), authentication flows, payment webhooks, and queue processing, all automated in CI/CD. Testing Next.js 15 Server Components well requires specialized expertise, which is often missing in rapid development cycles.

9. Production-Ready Full-Stack Platform: Delivered a complete SaaS application with a modern frontend (Next.js 15, React 19), scalable API (Hono with OpenAPI), payment processing (Stripe), authentication (NextAuth with OAuth), and internationalization. TypeScript strict mode, comprehensive validation, and OpenAPI documentation demonstrate a commitment to code quality and maintainability.

Ready to build an AI-powered product with the same level of precision, scalability, and intelligence?

Our team is here to take you from idea and concept to reality. Whether you’re reinventing search visibility, automating complex workflows, or integrating multi-model AI systems, we deliver enterprise-grade engineering that stands up in the real world. Let’s create the next breakthrough - together.